Author: KQ7R_Ton_Ngo

Source: Planet OpenStack

If you have installed OpenStack manually, you have gone through a long sequence of installing and configuring various services and drivers. In every case, these services and drivers run as processes on hosts designated as controllers, compute nodes, etc. When containers became a topic of interest to the community about two years ago, it quickly became apparent that these processes could easily be wrapped in containers. There are several benefits to containerizing the OpenStack services:

1. Filesystem isolation: required packages can be installed freely without modifying the base host and potentially impacting other services. This provides a clean way to add and remove services.

2. Simplified management: a common way to start, suspend, and stop containers.

3. Easier upgrading and packaging.

Fast forward to the present: there are now several projects that deploy an OpenStack cloud in which all services run in containers, and development continues to push OpenStack further onto the container platform. In this blog post, we will take a quick look at some key aspects of two projects: OpenStack Ansible (OSA) and Kolla. I will take the perspective of a user installing OpenStack, so this is not intended to be an exhaustive comparison of the two projects, although there may be useful information if you are trying to choose between them. Please refer to the documentation of these projects for the actual instructions on using OSA or Kolla.

First, what are the common themes between OSA and Kolla? In both, all OpenStack services and drivers run in containers. But the containers themselves still need to be deployed onto the hosts by some mechanism, and both OSA and Kolla use Ansible to deploy and manage them. To give users a quick way to evaluate the tool, both OSA and Kolla provide an all-in-one installation: the full OpenStack is installed onto one server, similar to DevStack. Both assume that the hosts have been prepared with certain prerequisites for the containers to work. The prerequisites are documented and there are some utilities to help prepare the hosts, but I found that it takes some experimentation to sort out the details and get the environment to the correct starting state. For instance, OSA assumes that a set of network bridges with specific names exists for the containers to connect to.
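Since a missing bridge is an easy prerequisite to overlook, a small pre-flight check can help before running the playbooks. The following is a minimal sketch, not part of OSA: the bridge names in `REQUIRED_BRIDGES` are assumptions for illustration (consult the OSA documentation for the names your release expects), and the helper functions `list_bridges` and `missing_bridges` are hypothetical. It relies on the Linux convention that a bridge device has a `bridge` subdirectory under `/sys/class/net/<name>`.

```python
from pathlib import Path
from typing import Iterable, List

# Assumed bridge names, for illustration only; verify against the OSA docs.
REQUIRED_BRIDGES = ["br-mgmt", "br-vxlan", "br-storage"]


def missing_bridges(required: Iterable[str], present: Iterable[str]) -> List[str]:
    """Return the required bridge names absent from `present`, keeping order."""
    present_set = set(present)
    return [name for name in required if name not in present_set]


def list_bridges(sysfs_net: Path = Path("/sys/class/net")) -> List[str]:
    """List interfaces that the Linux kernel reports as bridges.

    A bridge device exposes a `bridge` subdirectory in sysfs. If the sysfs
    path does not exist (for example, on a non-Linux host), return an empty list.
    """
    if not sysfs_net.is_dir():
        return []
    return sorted(p.name for p in sysfs_net.iterdir() if (p / "bridge").is_dir())


if __name__ == "__main__":
    missing = missing_bridges(REQUIRED_BRIDGES, list_bridges())
    if missing:
        print("Missing bridges:", ", ".join(missing))
    else:
        print("All required bridges are present.")
```

Keeping the comparison (`missing_bridges`) separate from the host inspection (`list_bridges`) makes it possible to try the logic on a workstation before running it on the target hosts.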

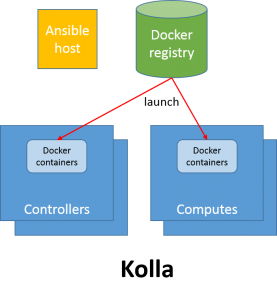

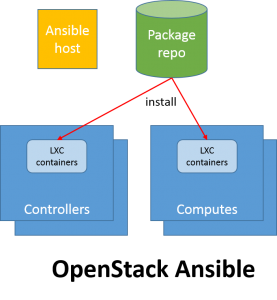

What are the differences? The key difference is in the container technology being used, and this in turn leads to other differences. OSA deploys LXC containers, while Kolla uses Docker containers. Compared to LXC, Docker provides a rich set of utilities to manage its containers, a reason behind its popularity. Kolla leverages these advantages by packaging particular versions of the services into Docker images. Official release versions are available on Docker Hub, or you can build your own.

OSA, on the other hand, creates the containers but treats them more or less as VMs: a private repo is created to serve the installation packages for a particular version of OpenStack, then the LXC containers are created and the software is installed into them.

Since Kolla images are fully pre-built, they are faster to deploy. For an environment of around 100 nodes, Kolla has been reported to install OpenStack in less than 30 minutes, while OSA would take about a day because it performs the actual installation of the software in the containers.

The layering in Docker images also allows Kolla to support more distros than OSA: Kolla can install onto Ubuntu, CentOS, RHEL, and Oracle Linux, while OSA supports only Ubuntu.

OSA, on the other hand, does derive some benefits from being fully based on Ansible. If the DevOps team is fluent in Ansible, then OSA can be very appealing. When a user needs to troubleshoot a problem, understand the steps being performed, or make customizations, the playbooks are readily available on the system. In this respect, OSA does offer more flexibility in configuring and managing the cloud.

What’s next for OpenStack in containers? Currently Ansible is used to deploy and manage the containers. However, a container platform such as Kubernetes offers a rich set of functionality for managing containers, so the natural question is whether it makes sense to let Kubernetes manage the OpenStack containers themselves. Although this may seem convoluted, there are benefits to this approach. Kubernetes can ensure high availability of the containers and can provide rolling upgrades of OpenStack services. However, one gap to be filled is that the Kubernetes descriptor for a container is not quite an orchestration language, since it does not describe the dependencies needed to express a sequence of actions. This functionality will need to be provided elsewhere.
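To make the dependency gap concrete, here is a minimal sketch of the kind of ordering logic that would have to live outside the container descriptors. It is not taken from Kolla or Kubernetes: the dependency map is a simplified, hypothetical example, and `start_order` is an illustrative helper. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to derive a start sequence in which every service comes after the services it depends on.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical, simplified dependency map for illustration only:
# each service maps to the set of services that must be running first.
DEPENDS_ON: Dict[str, Set[str]] = {
    "mariadb": set(),
    "rabbitmq": set(),
    "keystone": {"mariadb"},
    "glance": {"keystone", "mariadb"},
    "nova": {"keystone", "mariadb", "rabbitmq", "glance"},
}


def start_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Return one valid start sequence: dependencies always come first.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    for step, service in enumerate(start_order(DEPENDS_ON), start=1):
        print(f"{step}. start {service}")
```

Several valid sequences may exist for the same map (for example, `mariadb` and `rabbitmq` can start in either order), so a real orchestrator could also start independent services in parallel.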

Kolla is embarking on this development in the Ocata release: running OpenStack within Kubernetes. Check back later for an update when this new tool is available.

The post OpenStack in containers appeared first on IBM OpenTech.
